CATEGORICAL CROSS ENTROPY PLUS

It is a Sigmoid activation plus a Cross-Entropy loss. The Caffe Python layer of this Softmax loss supporting a multi-label setup with real numbers labels is available here Binary Cross-Entropy LossĪlso called Sigmoid Cross-Entropy loss. We compute the mean gradients of all the batch to run the backpropagation. For the positive classes in \(M\) we subtract 1 to the corresponding probs value and use scale_factor to match the gradient expression. As the gradient for all the classes \(C\) except positive classes \(M\) is equal to probs, we assign probs values to delta. In the backward pass we need to compute the gradients of each element of the batch respect to each one of the classes scores \(s\). If labels != 0 : # If positive classĭelta = scale_factor * ( delta - 1 ) + ( 1 - scale_factor ) * delta bottom. diff # If the class label is 0, the gradient is equal to probs Backward pass: Gradients computationĭef backward ( self, top, propagate_down, bottom ): delta = self. We then save the data_loss to display it and the probs to use them in the backward pass. The batch loss will be the mean loss of the elements in the batch. We use an scale_factor (\(M\)) and we also multiply losses by the labels, which can be binary or real numbers, so they can be used for instance to introduce class balancing. Then we compute the loss for each image in the batch considering there might be more than one positive label. We first compute Softmax activations for each class and store them in probs. log ( probs ) * labels * scale_factor # We sum the loss per class for each element of the batchĭata_loss = np. count_nonzero ( labels )) for c in range ( len ( labels )): # For each class num, 1 ]) # Compute cross-entropy lossįor r in range ( bottom.

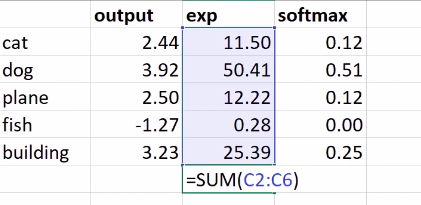

sum ( exp_scores, axis = 1, keepdims = True ) logprobs = np. max ( scores, axis = 1, keepdims = True ) # Compute Softmax activationsĮxp_scores = np. It is used for multi-class classification.ĭef forward ( self, bottom, top ): labels = bottom. If we use this loss, we will train a CNN to output a probability over the \(C\) classes for each image. It is a Softmax activation plus a Cross-Entropy loss. Is limited to binary classification (between two classes).Īlso called Softmax Loss. Is limited to multi-class classification (does not support multiple labels).
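The Sigmoid Cross-Entropy loss (a Sigmoid activation followed by a Cross-Entropy loss) can be sketched in NumPy as follows. This is a minimal illustration, not a framework API. The helper name `sigmoid_cross_entropy` is hypothetical, and it assumes raw scores and targets in \([0, 1]\) given as `(N, C)` arrays, with the loss summed over classes and averaged over the batch. It uses the numerically stable rewrite `max(x, 0) - x*t + log(1 + exp(-|x|))` of `-t*log(sigmoid(x)) - (1 - t)*log(1 - sigmoid(x))`. Its gradient is simply `sigmoid(x) - t`, which mirrors the `probs - label` gradient of the Softmax layer.

```python
import numpy as np


def sigmoid_cross_entropy(scores, labels):
    """Mean Sigmoid Cross-Entropy loss and its gradient w.r.t. the scores.

    Hypothetical helper: `scores` are raw (N, C) outputs, `labels` are targets
    in [0, 1] of the same shape; every entry is an independent binary problem.
    """
    n = scores.shape[0]
    # Stable form of -t*log(sigmoid(x)) - (1 - t)*log(1 - sigmoid(x))
    per_entry = (np.maximum(scores, 0) - scores * labels
                 + np.log1p(np.exp(-np.abs(scores))))
    # Sum over classes, mean over the batch
    loss = np.sum(per_entry) / n
    # sigmoid(x) = exp(-log(1 + exp(-x))), computed without overflow
    probs = np.exp(-np.logaddexp(0.0, -scores))
    # d(loss)/d(score) = (sigmoid(score) - label) / N
    grad = (probs - labels) / n
    return loss, grad


scores = np.array([[2.0, -1.0, 0.5], [-3.0, -0.2, -2.0]])
labels = np.array([[1.0, 0.0, 1.0], [0.0, 1.0, 0.0]])
loss, grad = sigmoid_cross_entropy(scores, labels)

# The stable form agrees with the textbook expression
s = 1.0 / (1.0 + np.exp(-scores))
naive = np.sum(-labels * np.log(s) - (1 - labels) * np.log(1 - s)) / scores.shape[0]
print(np.isclose(loss, naive))  # → True
```

The stable rewrite matters for large-magnitude scores. There, `sigmoid` saturates to exactly 0 or 1 and the textbook expression would take `log(0)`.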

There are three kinds of classification tasks:

1. Binary classification: two exclusive classes.
2. Multi-class classification: more than two exclusive classes.
3. Multi-label classification: non-exclusive classes.

In case (1), you need to use binary cross entropy. In case (2), you need to use categorical cross entropy. In case (3), you need to use binary cross entropy.

You can consider the multi-label classifier as a combination of multiple independent binary classifiers. If you have 10 classes, you have 10 separate binary classifiers, each trained independently. This is how we can produce multiple labels for each sample. If you want to make sure that at least one label is assigned, you can select the class with the lowest classification loss, or use other metrics.

With two classes, the Cross-Entropy loss becomes

\[ CE = -\sum_{i=1}^{2} t_i \log(s_i) = -t_1 \log(s_1) - (1 - t_1) \log(1 - s_1) \]

where it is assumed that there are two classes, \(C_1\) and \(C_2\). Here \(t_1\) and \(s_1\) are the groundtruth and the score for \(C_1\), and \(t_2 = 1 - t_1\) and \(s_2 = 1 - s_1\) are the groundtruth and the score for \(C_2\). That is the case when we split a Multi-Label classification problem into \(C\) binary classification problems, the setting of the Binary Cross-Entropy Loss.
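The following sketch treats a multi-label problem as \(C\) independent binary classifiers. It is a minimal illustration under stated assumptions, not a reference method. The helper names `sigmoid`, `two_class_ce` and `predict_multilabel`, the 0.5 threshold, and the fallback rule are all hypothetical. The fallback reads "the class with the lowest classification loss" as the class with the smallest \(-\log(s_c)\), i.e. the largest sigmoid score. `two_class_ce` spells out the two-class formula with \(t_2 = 1 - t_1\) and \(s_2 = 1 - s_1\).

```python
import numpy as np


def sigmoid(x):
    # Logistic function written via logaddexp to avoid overflow for large |x|
    return np.exp(-np.logaddexp(0.0, -x))


def two_class_ce(t1, s1):
    """Cross-Entropy for classes C1, C2 with t2 = 1 - t1 and s2 = 1 - s1."""
    t = np.array([t1, 1.0 - t1])
    s = np.array([s1, 1.0 - s1])
    return -np.sum(t * np.log(s))


def predict_multilabel(scores, threshold=0.5):
    """Treat each of the C columns as an independent binary classifier.

    Assumed rule (illustrative): class c is assigned when sigmoid(score) >
    `threshold`. If a sample gets no label, fall back to the class with the
    lowest loss for a positive prediction, -log(s_c), i.e. the largest s_c.
    """
    probs = sigmoid(scores)
    preds = probs > threshold
    rows = np.flatnonzero(~preds.any(axis=1))  # samples with no label
    preds[rows, np.argmax(probs[rows], axis=1)] = True
    return preds


scores = np.array([[2.0, -1.0, 0.5],
                   [-3.0, -0.2, -2.0]])
print(predict_multilabel(scores))
# Row 0 passes the threshold for classes 0 and 2; row 1 passes for none,
# so its highest-probability class (index 1) is used instead.
print(np.isclose(two_class_ce(1.0, 0.8), -np.log(0.8)))  # → True
```

Because each column is scored independently, the predicted label set can be empty. The fallback is one simple way to enforce at least one label; a per-class threshold tuned on validation data is a common alternative.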
